Preface

Happy new year, fellow developers! The talk I gave at the Chicago Python User Group was a huge success, and I hope everyone enjoyed it. I'd like to start a series of blog posts covering a number of subjects related to PyPDFForm. These posts will hopefully cover some of the more in-depth technical details that I didn't get to during my talk.

In this first post, I want to talk about all the CI/CD pipelines I have set up for the project to ensure its stability and automate its workflows. In my opinion, these pipelines are also general-purpose enough to be reused by other Python projects.

Test Automation

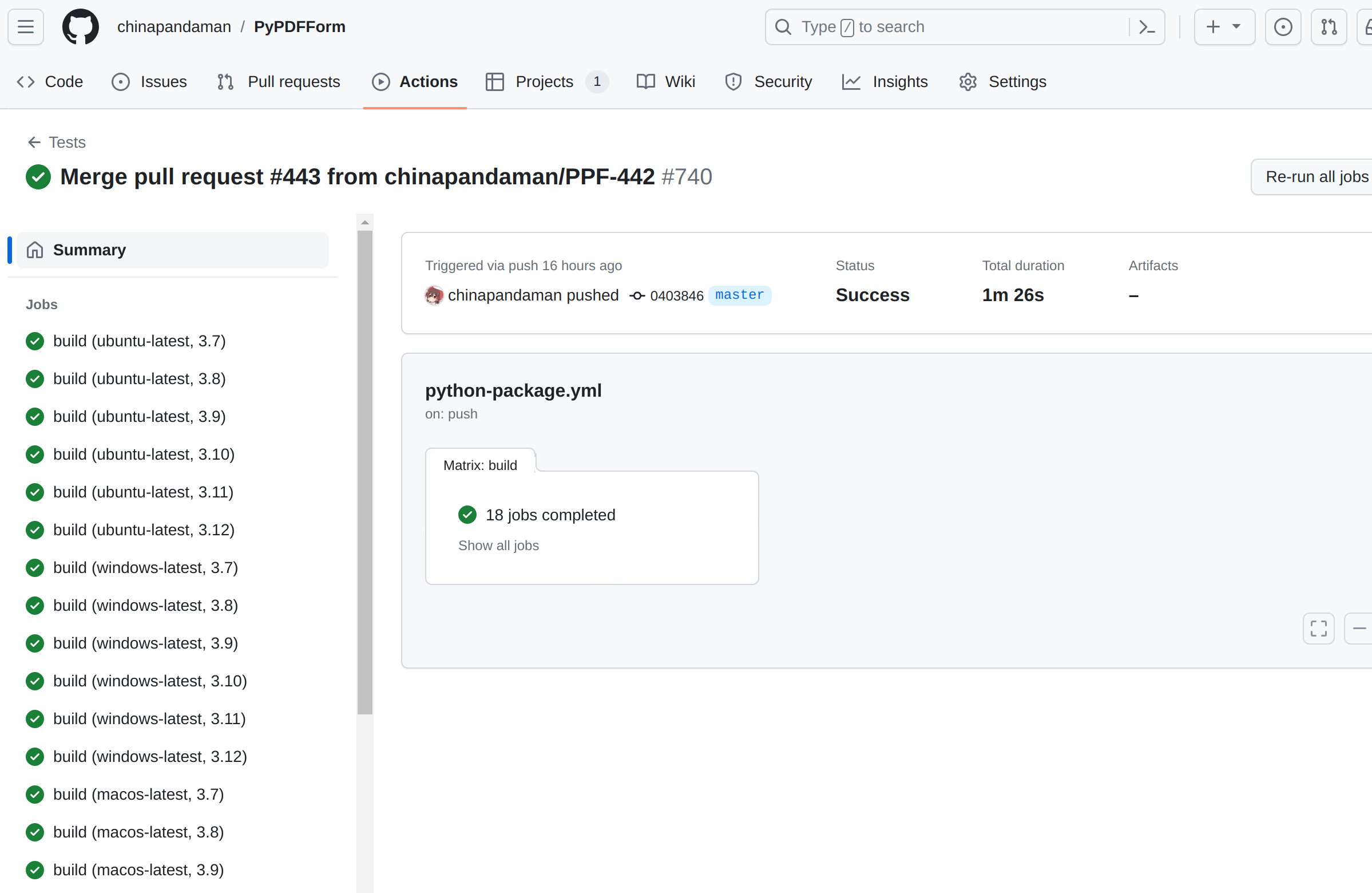

PyPDFForm uses GitHub Actions for all its CI/CD pipelines. When it comes to continuous integration, the first pipeline pretty much any project should have is build and test automation. PyPDFForm implements all its tests using pytest, and since Python is an interpreted language, it doesn't need many build steps other than downloading dependencies. The following action defines the pipeline for PyPDFForm's test automation:

```yaml
name: Tests
```

Let’s look at this block by block to get a better understanding of it. First:

```yaml
on:
```

This chunk defines the conditions that trigger this action. In this case, we want to run our tests whenever a PR is opened or a commit is pushed directly to the master branch.
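As a rough sketch, a trigger block matching that description could look like the following. It assumes the default branch is literally named master and that pull requests against any branch should run the tests; PyPDFForm's actual file may differ.

```yaml
on:
  # Run on every push (including direct commits) to master
  push:
    branches: [master]
  # Run on pull requests; by default this fires when a PR is opened,
  # reopened, or receives new commits
  pull_request:
```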

This next section defines all the environments that the tests should be run on:

```yaml
runs-on: ${{ matrix.os }}
```

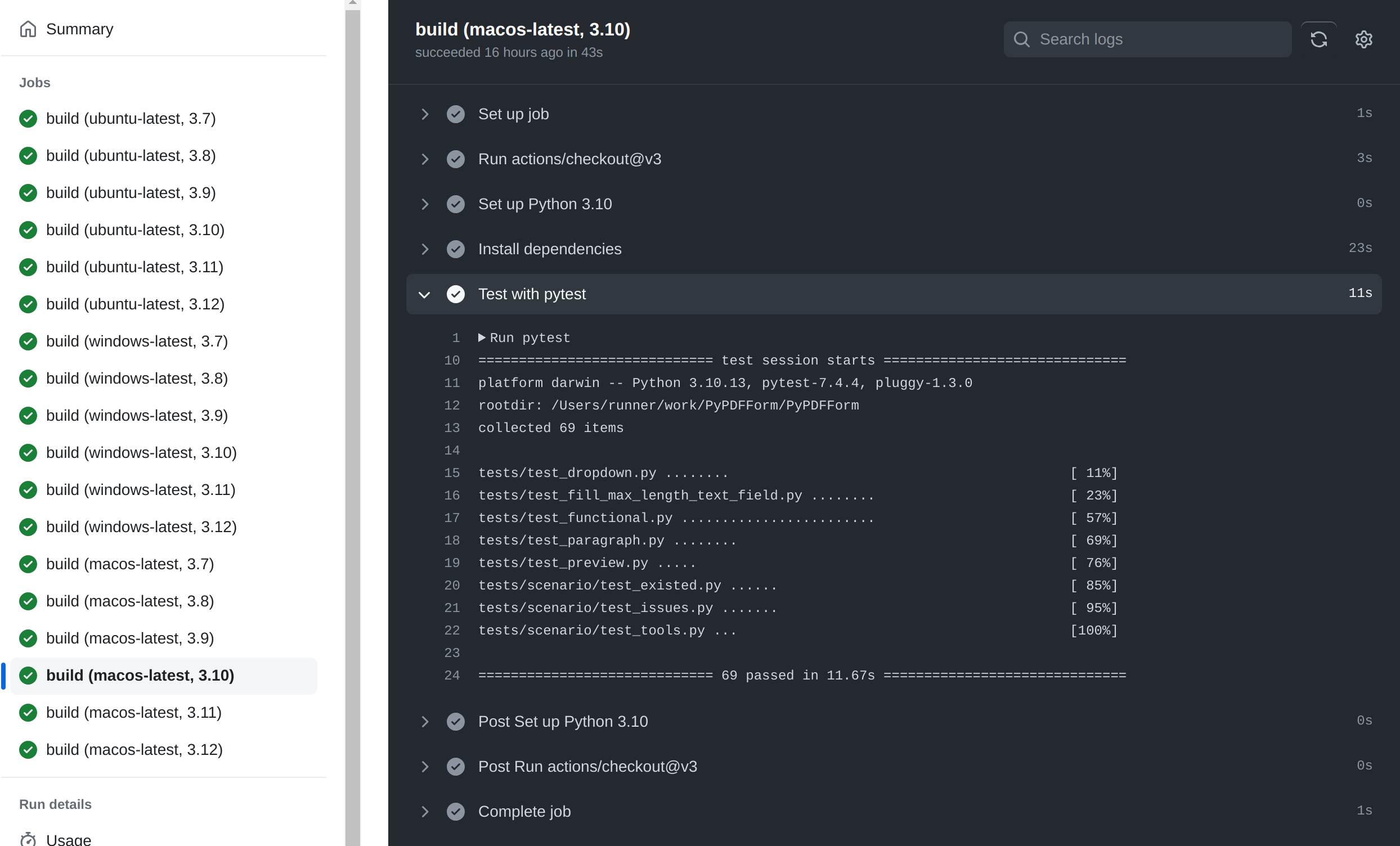

GitHub Actions has a very nice way of defining environments with the matrix strategy. In this case, I want to test PyPDFForm on all three operating systems (Linux, macOS and Windows), and on each OS I want to test every currently supported Python version. Overall, these environment definitions kick off 3 × 6 = 18 different builds whenever this action is invoked.
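A minimal sketch of such a matrix is shown below. The job name test and the exact list of six Python versions are placeholders, not necessarily what PyPDFForm uses.

```yaml
jobs:
  test:
    runs-on: ${{ matrix.os }}
    strategy:
      matrix:
        # 3 operating systems x 6 Python versions = 18 jobs
        os: [ubuntu-latest, macos-latest, windows-latest]
        # Hypothetical version list; quoted so YAML keeps "3.10" as a string
        python-version: ["3.7", "3.8", "3.9", "3.10", "3.11", "3.12"]
```

Quoting the versions matters: unquoted, YAML would read 3.10 as the number 3.1.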

The pipeline itself is divided into four steps. The first two simply check out the source code and set up the Python environment, both using existing actions:

```yaml
- uses: actions/checkout@v3
```

Note that python-version here references the python-version entry defined in the matrix.
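For illustration, the first two steps might be written like this. The actions/setup-python@v4 version tag is an assumption.

```yaml
steps:
  - uses: actions/checkout@v3
  - name: Set up Python ${{ matrix.python-version }}
    uses: actions/setup-python@v4
    with:
      # Picks up the version from the current matrix entry
      python-version: ${{ matrix.python-version }}
```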

The next step is to install the dependencies. Unlike compiled languages, Python usually doesn't need a build process, so a simple pip install is enough for this step:

```yaml
- name: Install dependencies
```
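A typical install step is sketched below, assuming the dependencies are listed in a requirements.txt file (the file name is a hypothetical placeholder).

```yaml
- name: Install dependencies
  run: |
    python -m pip install --upgrade pip
    # Hypothetical: assumes runtime and test dependencies are listed here
    pip install -r requirements.txt
```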

And finally we get to actually run the tests, which is just a simple pytest command:

```yaml
- name: Test with pytest
```

Now we have a pretty standard action for running automated tests.

Code Coverage

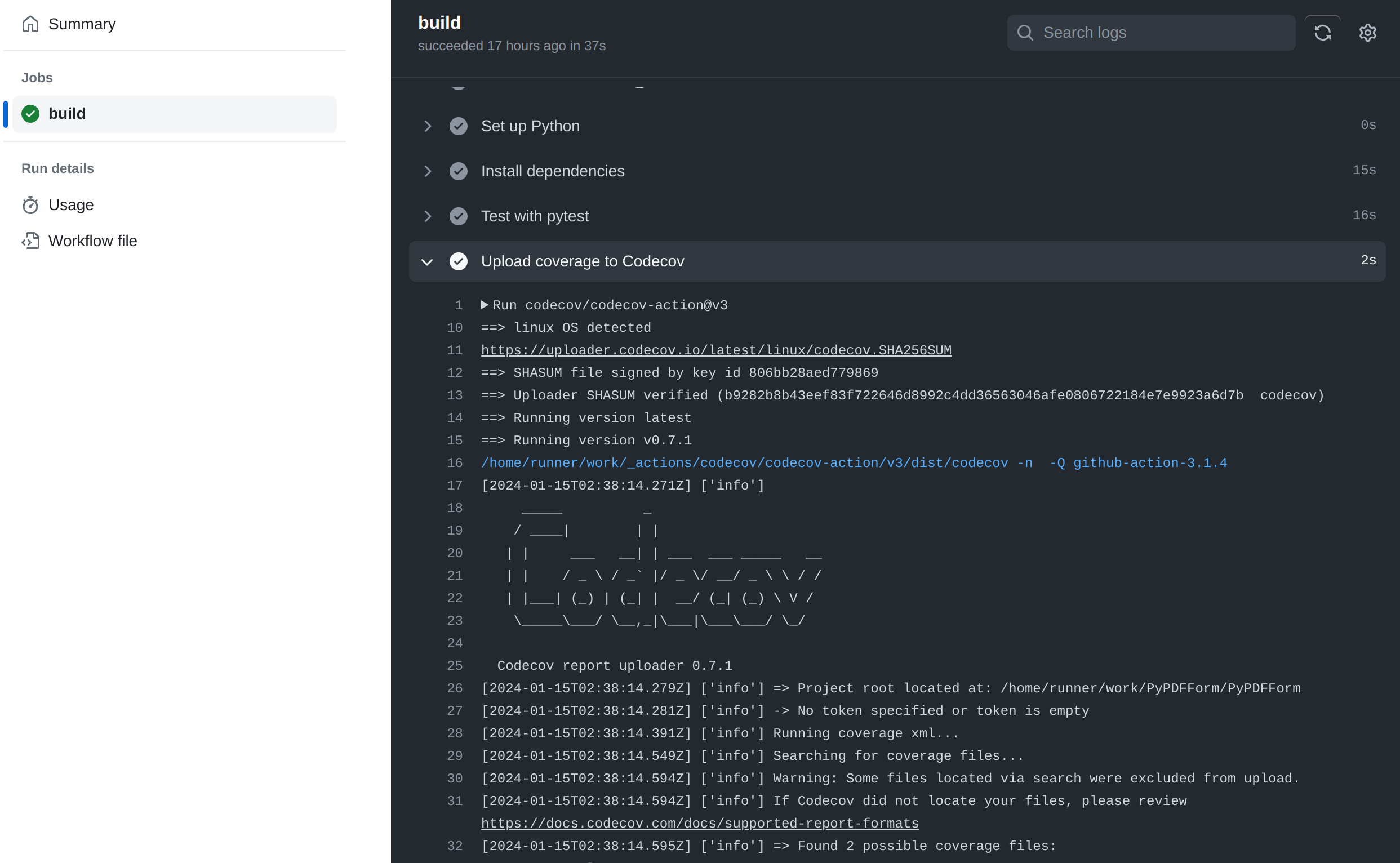

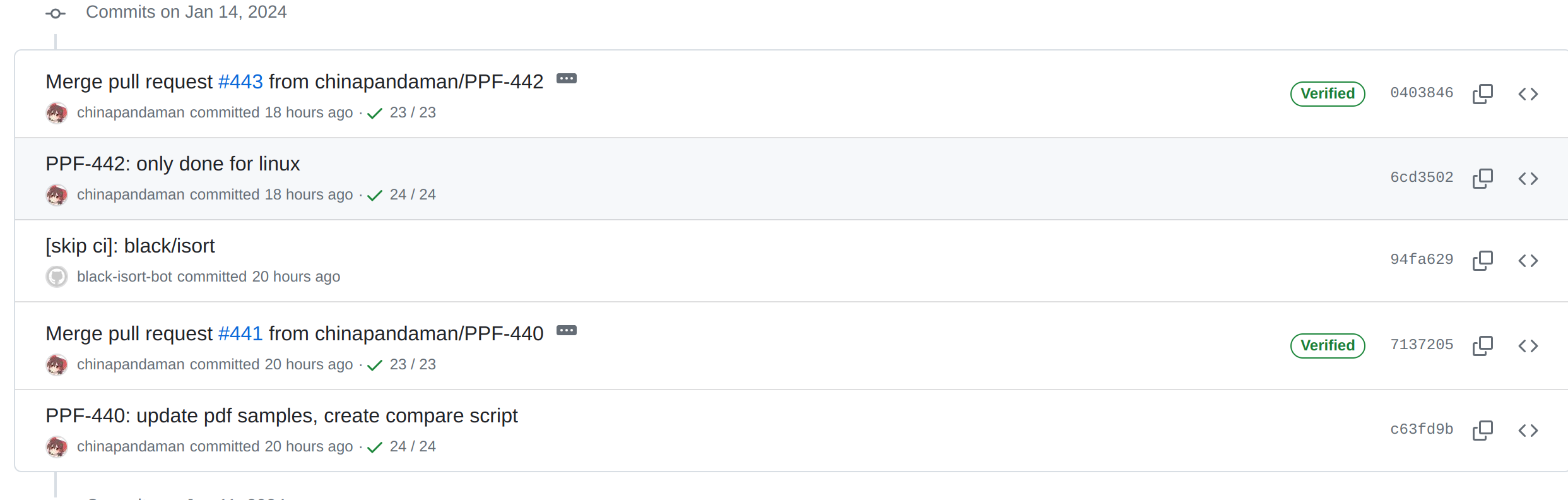

The action for code coverage generation used to be part of the test automation action discussed in the last section. It was split out into its own action in this PR.

The reason is exactly what the title of the PR says: to reduce the number of times code coverage is uploaded to Codecov. There seems to be an issue, on either GitHub's or Codecov's side, where too many coverage uploads start hitting API rate limits, as described in this issue.

With the old setup, every run of the test action submitted coverage to Codecov eighteen times, once per build, and that caused me the issue above. So code coverage is now its own separate action:

```yaml
name: Coverage
```

This action is very similar to the test automation action, with two major differences. First:

```yaml
runs-on: ubuntu-latest
```

```yaml
- name: Set up Python
```

With the above environment definition, this action runs only once, on the latest version of Ubuntu with the latest version of Python. This means coverage is uploaded only once per run, making it much less likely to trigger the rate limits.
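In sketch form, a job like this simply drops the matrix. The job name and the "3.x" version specifier (which actions/setup-python resolves to the latest available Python 3 release) are assumptions about how "latest version of Python" could be expressed.

```yaml
jobs:
  coverage:
    # A single job with no matrix, so coverage is uploaded once per run
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v3
      - name: Set up Python
        uses: actions/setup-python@v4
        with:
          # "3.x" resolves to the latest stable Python 3 release available
          python-version: "3.x"
```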

And finally there’s an extra step after the tests are run that uploads the coverage to Codecov:

```yaml
- name: Upload coverage to Codecov
```
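One way to produce and upload the report is sketched below. It assumes coverage is collected with the pytest-cov plugin into a coverage.xml file and that the package directory is named PyPDFForm; the codecov/codecov-action@v3 version tag is also an assumption.

```yaml
- name: Test with pytest
  run: |
    pip install pytest-cov
    # Hypothetical: measure coverage of the PyPDFForm package, write coverage.xml
    pytest --cov=PyPDFForm --cov-report=xml
- name: Upload coverage to Codecov
  uses: codecov/codecov-action@v3
  with:
    files: ./coverage.xml
```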

It is also worth noting that PyPDFForm integrates the Codecov GitHub app so that it works better with this action.

If the rate limiting issue is ever resolved, this action will likely be merged back into the test automation action.

Code Formatting

PyPDFForm uses two methods to ensure code quality. The first is the automated code formatting action. Python has two great code formatting tools: black, which formats all the Python files in your source code, and isort, which sorts your imports.

PyPDFForm makes use of black and isort with the following action:

```yaml
name: Code Formatting
```

Unlike the test automation action, this action only runs when a commit is made to the master branch:

```yaml
on:
```

This ensures that the automated formatting never pollutes the diff between a branch and master in a PR.
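A trigger restricted in this way might be written as follows, again assuming the branch is named master.

```yaml
on:
  # Only pushes to master (direct commits or merged PRs) trigger formatting
  push:
    branches: [master]
```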

```yaml
- uses: actions/checkout@v3
```

The checkout step of this pipeline uses a secret token called BLACK_ISORT_TOKEN. This is a GitHub developer token of mine stored in the repository's secrets. We will see why it's needed later on.
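For reference, actions/checkout accepts a token input, so passing the secret could look like this sketch.

```yaml
- uses: actions/checkout@v3
  with:
    # Check out with the stored developer token instead of the default
    # GITHUB_TOKEN; checkout persists it, so later git pushes use it
    token: ${{ secrets.BLACK_ISORT_TOKEN }}
```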

To use black and isort, they first need to be installed:

```yaml
- name: Install black/isort
```

And finally, the following chunk gets executed:

```yaml
- name: If needed, commit black/isort changes to the pull request
```

In this step, the following happens in order:

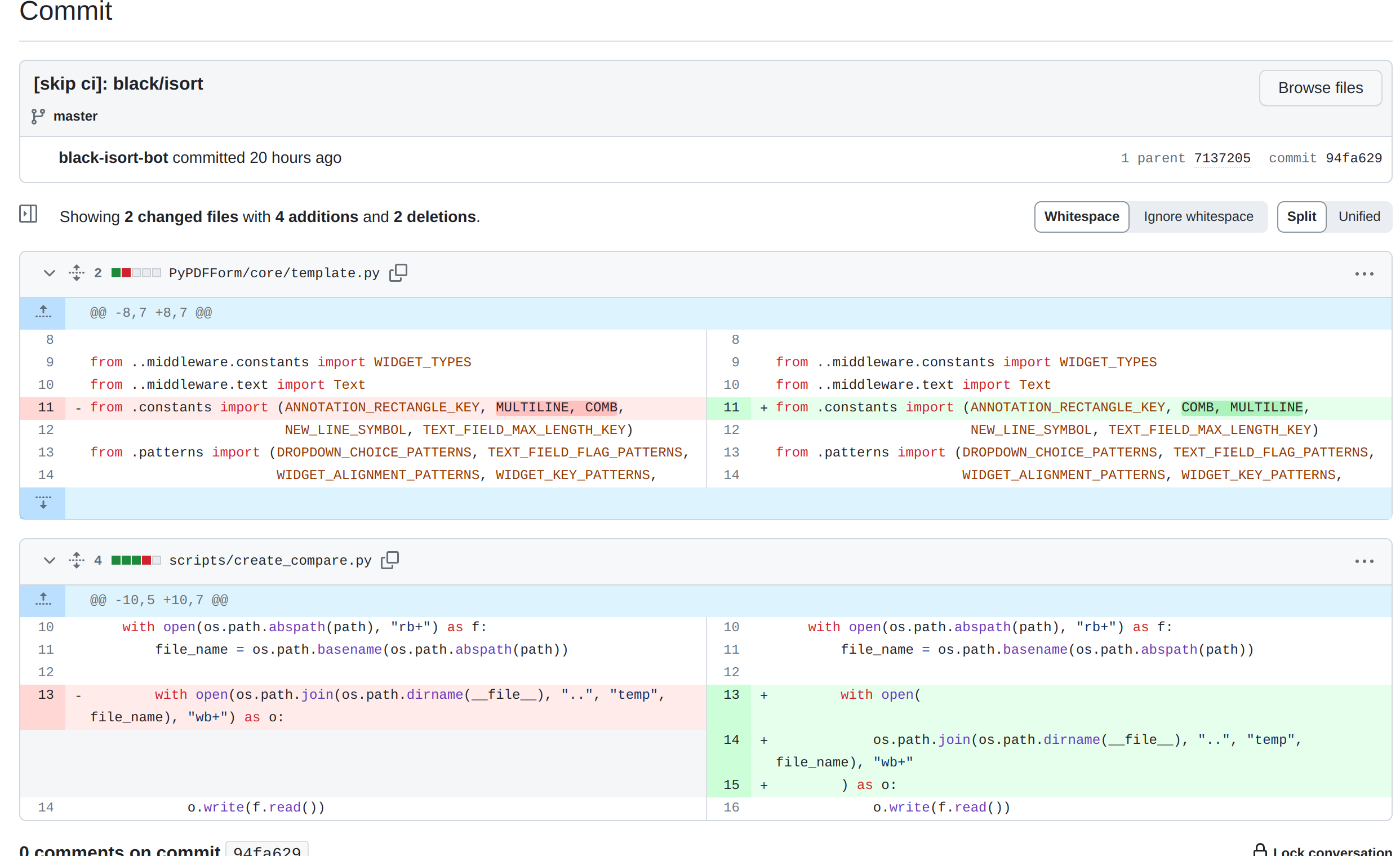

- black is run on all Python files.
- isort is run on all Python files.
- Some git configurations are set so that a commit can be made.
- Two git diff commands are run to determine whether any file was modified by black or isort. If so, a new commit is made with the modified files.
- The commit is pushed to master.

Note that the commit message starts with [skip ci], so pushing it to master does not trigger any new actions. This is intended, since the commit contains only code formatting changes.
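The sequence listed above can be sketched roughly as follows. This is a simplified stand-in rather than PyPDFForm's actual script: it uses a single git diff --quiet check instead of two git diff commands, and the committer name, email, and commit message are hypothetical.

```yaml
- name: Install black/isort
  run: pip install black isort
- name: If needed, commit black/isort changes to the pull request
  run: |
    black .
    isort .
    # Hypothetical bot identity for the formatting commit
    git config --global user.name "github-actions"
    git config --global user.email "github-actions@users.noreply.github.com"
    # git diff --quiet exits non-zero when tracked files were modified
    if ! git diff --quiet; then
      git commit -am "[skip ci] Apply black and isort formatting"
      git push
    fi
```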

Now you can see why the GitHub developer token needs to be set up when we first check out the source code: the credential is needed to push the code formatting changes back to master.

The screenshot earlier in this section shows an example outcome of this action.

Static Analysis

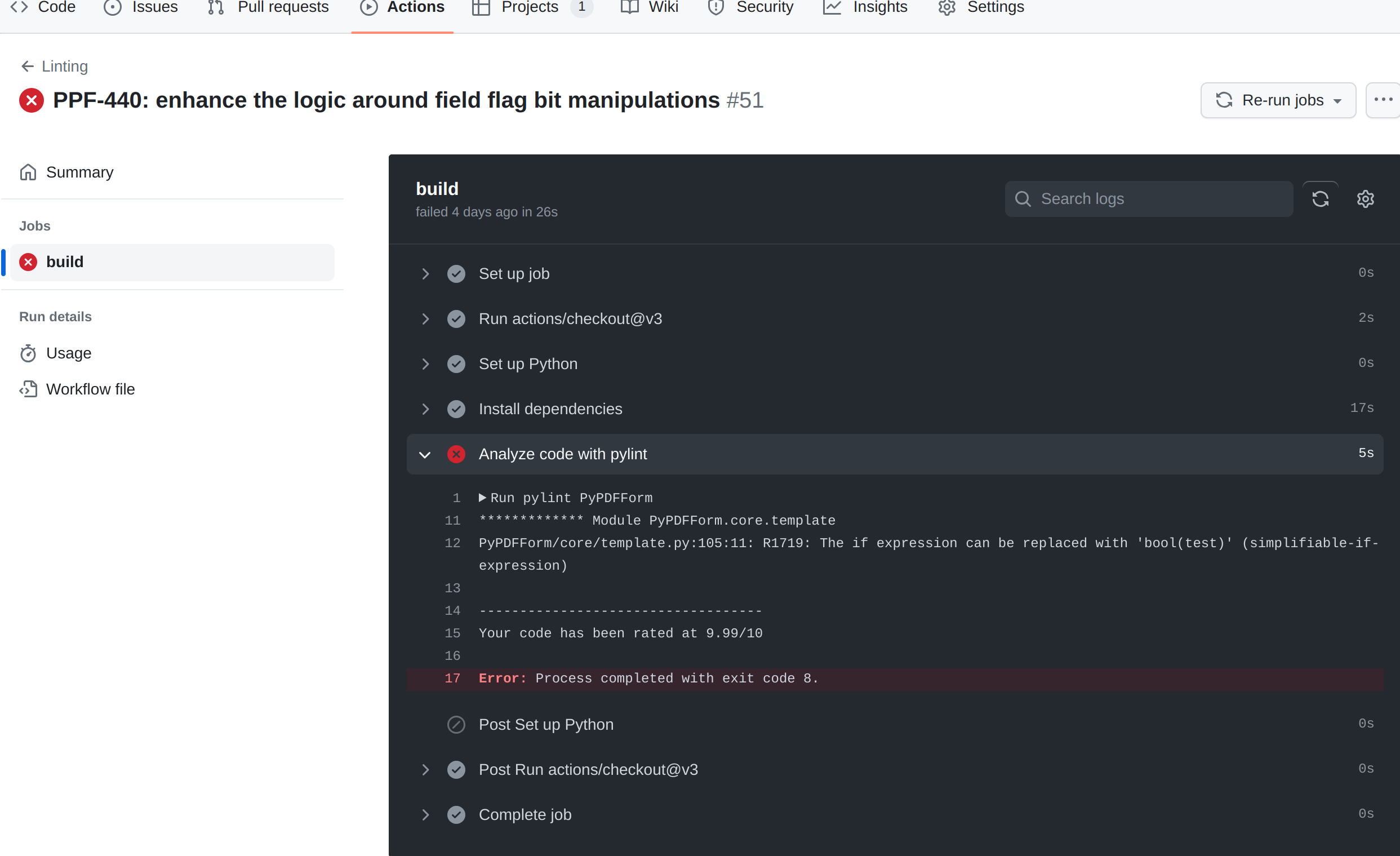

Complementing the code formatting action, the following action uses pylint to statically analyze the project and catch issues that black might miss:

```yaml
name: Linting
```

This action is about 90% identical to the test automation action, except for the following step:

```yaml
- name: Analyze code with pylint
```

Instead of running tests with pytest, it runs pylint on the source code for a static analysis.
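A minimal pylint step might look like this. Listing files with git ls-files is one common pattern and an assumption here, not necessarily the exact target PyPDFForm lints; shell: bash keeps the command substitution working on Windows runners too.

```yaml
- name: Analyze code with pylint
  shell: bash
  run: |
    pip install pylint
    # Lint every tracked Python file in the repository
    pylint $(git ls-files '*.py')
```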

pylint, paired with black and isort, ensures that PyPDFForm always has a clean and, more importantly, unified code style, regardless of how each developer's own coding style varies.

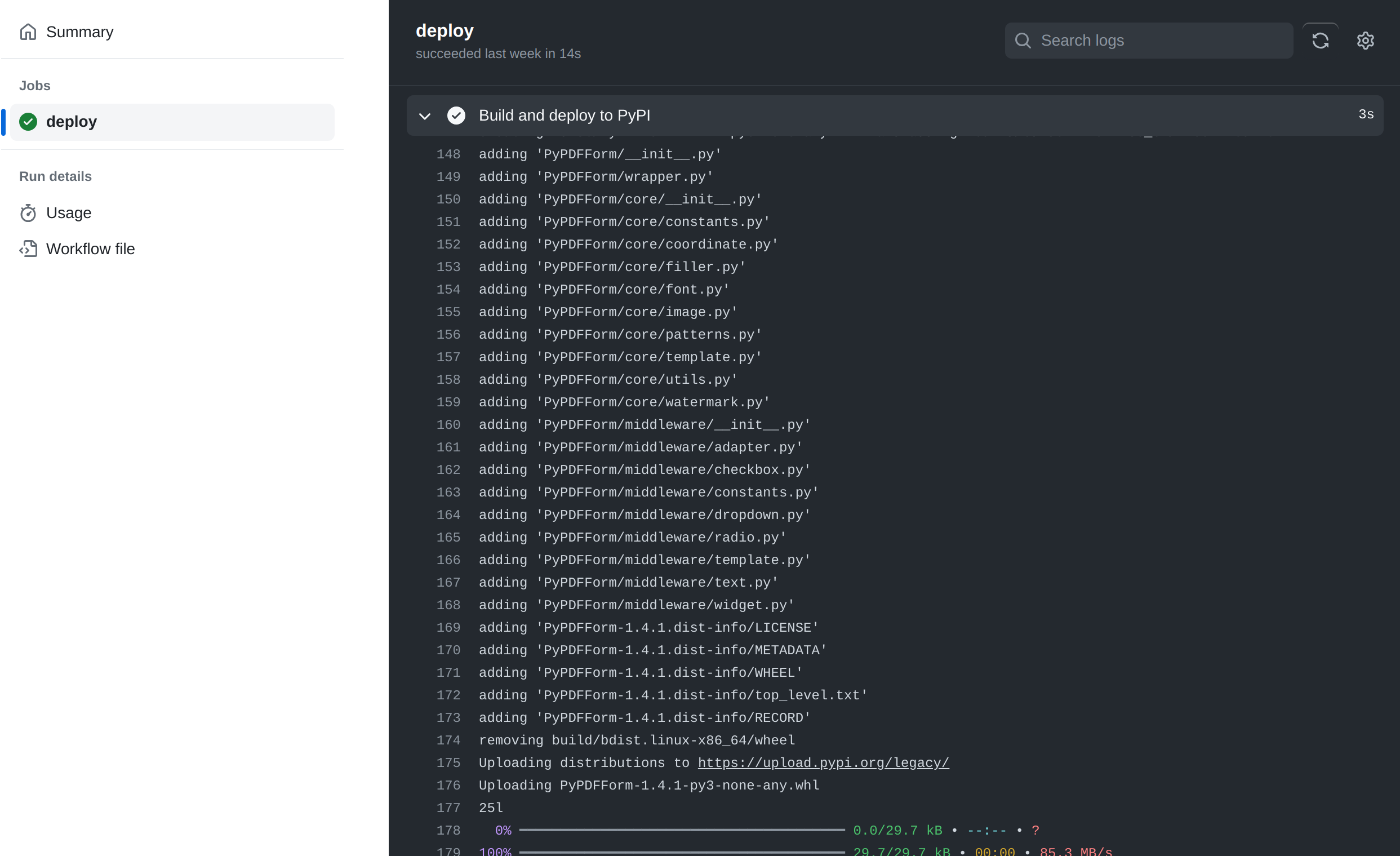

Deploy

So far we have only covered continuous integration for PyPDFForm. It's time to talk about the action that allows it to be continuously deployed:

```yaml
name: Deploy
```

This action, unlike any other action we have discussed so far, is triggered when a release is made:

```yaml
on:
```
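Sketched out, a release trigger could look like this. Restricting it to the published activity type is an assumption, since releases also emit other activity types such as created.

```yaml
on:
  release:
    # Fire once when a release is published on GitHub
    types: [published]
```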

The first few steps of the pipeline are similar to those of the other actions: check out the source, set up Python, and so on. The part we care about starts here:

```yaml
- name: Install dependencies
```

The above installs setuptools, wheel, and twine, the tools we need to package and deploy the project.

Finally, this is where the actual deployment happens:

```yaml
- name: Build and deploy to PyPI
```

It first packages the project from setup.py, following this guide, and then uploads the packaged files to PyPI.

Note that this step uses two environment variables: TWINE_USERNAME, which is set to __token__, and TWINE_PASSWORD, which references a secret called PYPI_PASSWORD holding a PyPI API token. Together, these two variables provide the credentials PyPI needs for the final upload.
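Putting the described pieces together, the install and deploy steps might look roughly like this. The sdist bdist_wheel arguments are an assumption based on the usual packaging guide; the environment variables and secret name come from the description above.

```yaml
- name: Install dependencies
  run: |
    python -m pip install --upgrade pip
    pip install setuptools wheel twine
- name: Build and deploy to PyPI
  env:
    TWINE_USERNAME: __token__
    TWINE_PASSWORD: ${{ secrets.PYPI_PASSWORD }}
  run: |
    # Legacy packaging: build an sdist and a wheel into dist/
    python setup.py sdist bdist_wheel
    # twine reads the credentials from the environment variables above
    twine upload dist/*
```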

It is also worth noting that this step will need to be changed soon, as packaging by calling setup.py directly is deprecated.
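One likely replacement, sketched here as an assumption rather than a settled plan, is the build package, which invokes the project's build backend instead of calling setup.py directly.

```yaml
- name: Build and deploy to PyPI
  env:
    TWINE_USERNAME: __token__
    TWINE_PASSWORD: ${{ secrets.PYPI_PASSWORD }}
  run: |
    python -m pip install --upgrade build twine
    # PEP 517 build frontend: writes the sdist and wheel into dist/
    python -m build
    twine upload dist/*
```

Without a pyproject.toml, python -m build falls back to setuptools' legacy backend, so an existing setup.py keeps working during the transition.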

Conclusion

Some of these actions were created a while ago, and GitHub Actions and the Python ecosystem may well recommend newer best practices by now. Still, I think this article outlines a skeleton of how CI/CD can be set up for a Python project, and I hope anyone reading finds it helpful.